Fractal Fract, Free Full-Text

By a mysterious writer

Last updated 16 November 2024

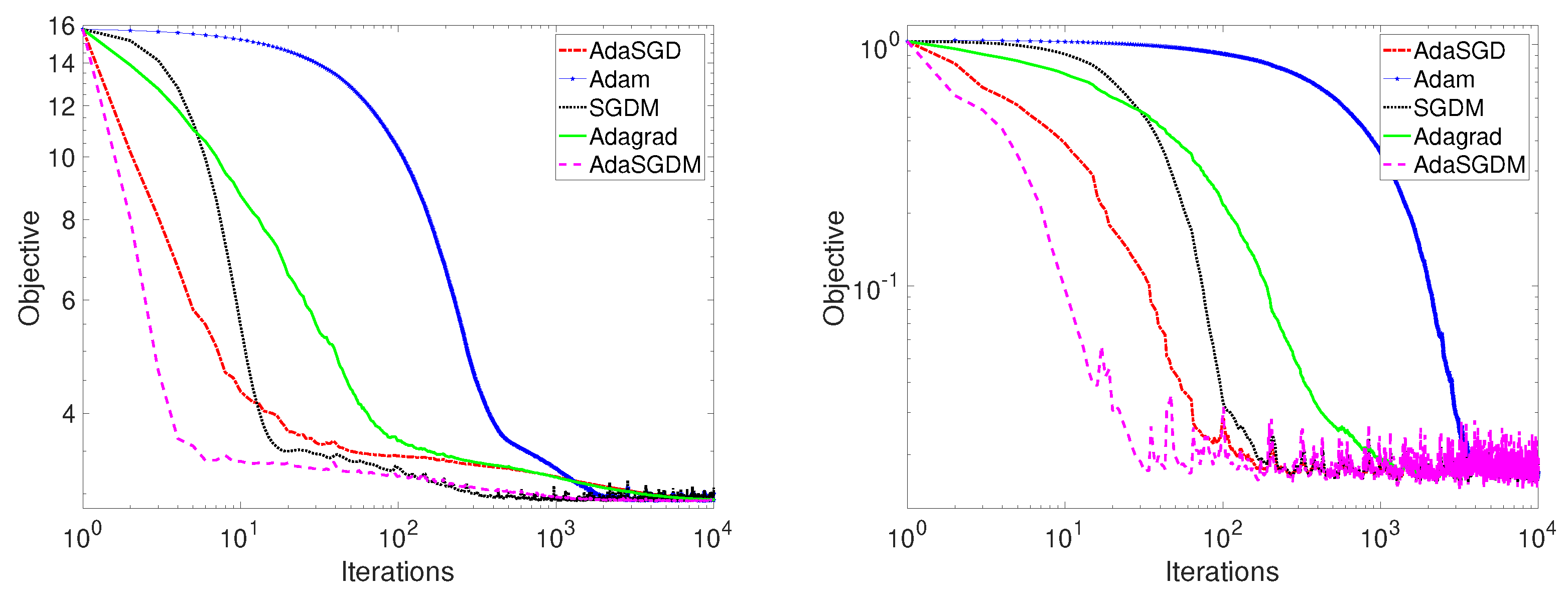

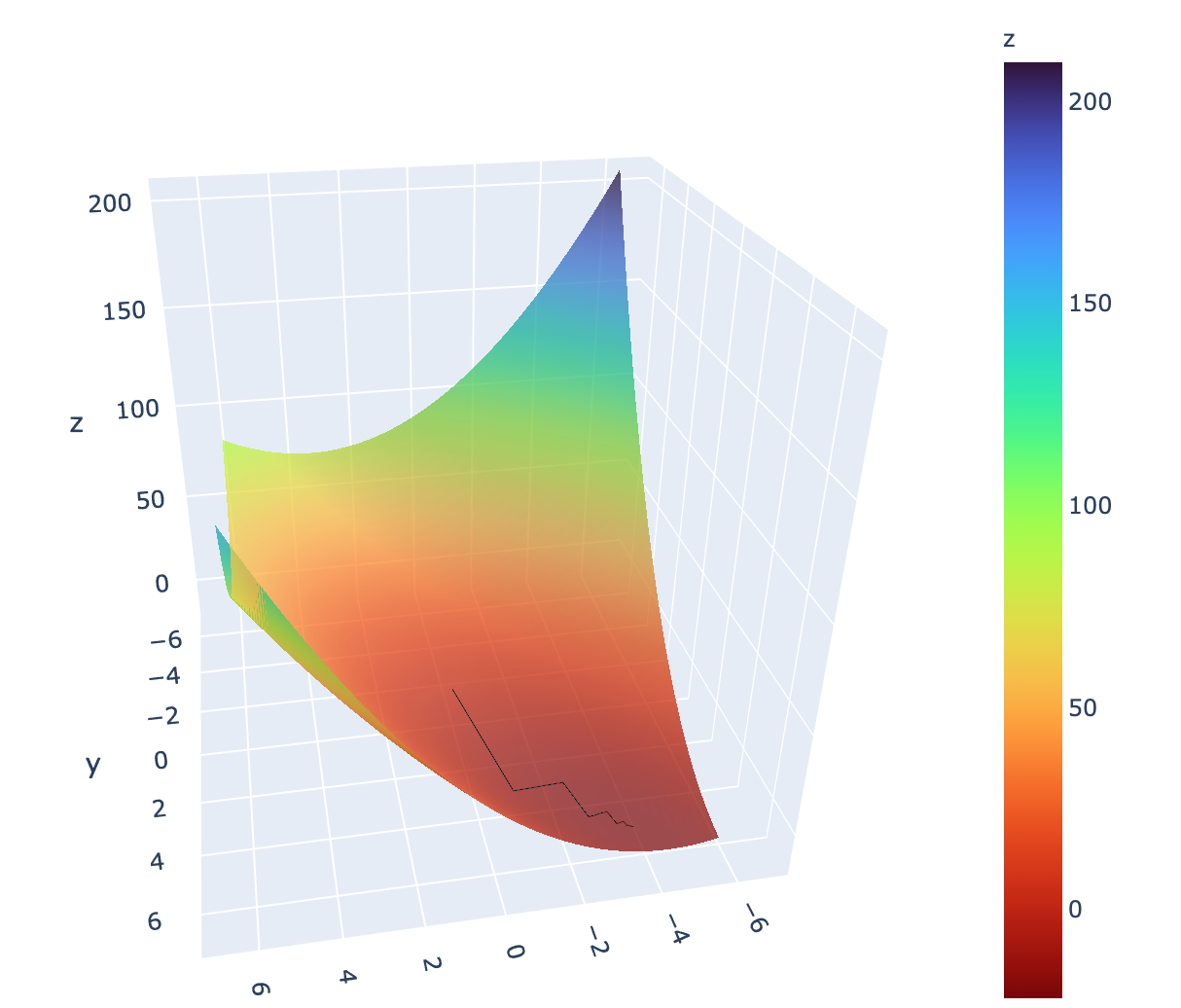

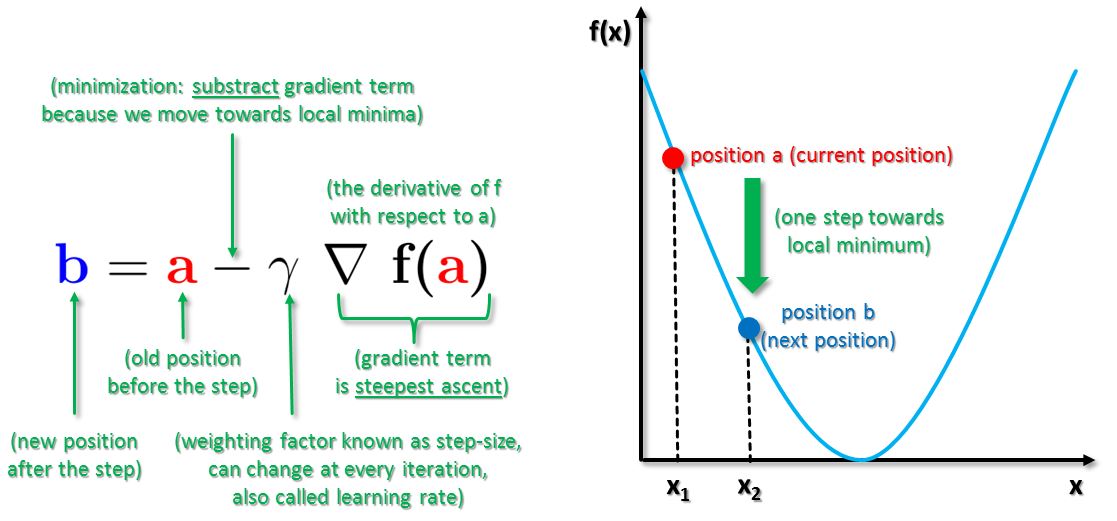

Stochastic gradient descent (SGD) is the method of choice for solving large-scale optimization problems in machine learning. Choosing the step sizes effectively is difficult, however, and the choice can strongly influence the performance of SGD algorithms. In this paper, we propose a class of faster adaptive gradient descent methods, named AdaSGD, for solving both convex and non-convex optimization problems. The novelty of the method is a new adaptive step size that depends on the expectation of the past stochastic gradient and its second moment, which makes it efficient and scalable for big data and high parameter dimensions. We show theoretically that the proposed AdaSGD algorithm has a convergence rate of O(1/T) in both convex and non-convex settings, where T is the maximum number of iterations. In addition, we extend AdaSGD to the case of momentum and obtain the same convergence rate for AdaSGD with momentum. To illustrate the theoretical results, we present several numerical experiments on problems arising in machine learning that support the promise of the proposed method.
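The abstract does not give the AdaSGD update rule, so the following is only a minimal sketch of the general idea it describes: a step size scaled by running estimates of the stochastic gradient's first and second moments. As an assumption, it swaps in an Adam-style exponential moving average with a 1/sqrt(t) decay; the function names, the toy least-squares data, and all hyperparameters are hypothetical and are not taken from the paper.

```python
import math
import random


def make_data():
    """Toy, noise-free data on the line y = 3x + 1 (hypothetical example)."""
    return [(i / 10, 3 * i / 10 + 1) for i in range(50)]


def loss(w, b, data):
    """Mean squared error of the line w*x + b over the data set."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def adaptive_sgd(data, steps=2000, base_lr=0.1, beta1=0.9, beta2=0.999,
                 eps=1e-8, seed=0):
    """Adam-style adaptive SGD; an assumed stand-in, NOT the paper's AdaSGD."""
    rng = random.Random(seed)
    params = [0.0, 0.0]   # [w, b]
    m = [0.0, 0.0]        # running estimate of the gradient (first moment)
    v = [0.0, 0.0]        # running estimate of the squared gradient (second moment)
    for t in range(1, steps + 1):
        x, y = rng.choice(data)                      # draw one sample at random
        residual = params[0] * x + params[1] - y
        grad = [2.0 * residual * x, 2.0 * residual]  # stochastic gradient
        lr_t = base_lr / math.sqrt(t)                # decaying base step size
        for i in range(2):
            m[i] = beta1 * m[i] + (1 - beta1) * grad[i]
            v[i] = beta2 * v[i] + (1 - beta2) * grad[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)          # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            # The effective step shrinks where the second moment is large.
            params[i] -= lr_t * m_hat / (math.sqrt(v_hat) + eps)
    return params[0], params[1]


if __name__ == "__main__":
    data = make_data()
    w, b = adaptive_sgd(data)
    print("initial loss:", loss(0.0, 0.0, data))
    print("final loss:  ", loss(w, b, data))
```

The first-moment average `m` also acts as a momentum term, which loosely mirrors the momentum variant mentioned above. The sketch makes no claim about reproducing the O(1/T) rate.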

On the fractal patterns of language structures

A Trader's Guide to Using Fractals

Ultra high resolution mandelbrot fractal - 416 (1890127)

Rybka 1.0 Get File - Colaboratory

:max_bytes(150000):strip_icc()/ATradersGuidetoUsingFractals3-8cd6ac59b8e142a8a28ba8cb42ea397d.png)

A Trader's Guide to Using Fractals

Muller Free Energy Generator Review - Colaboratory

Applied Sciences, Free Full-Text, 3D-Printed Super-Wideband Spidron Fractal Cube Antenna with Laminated Copper

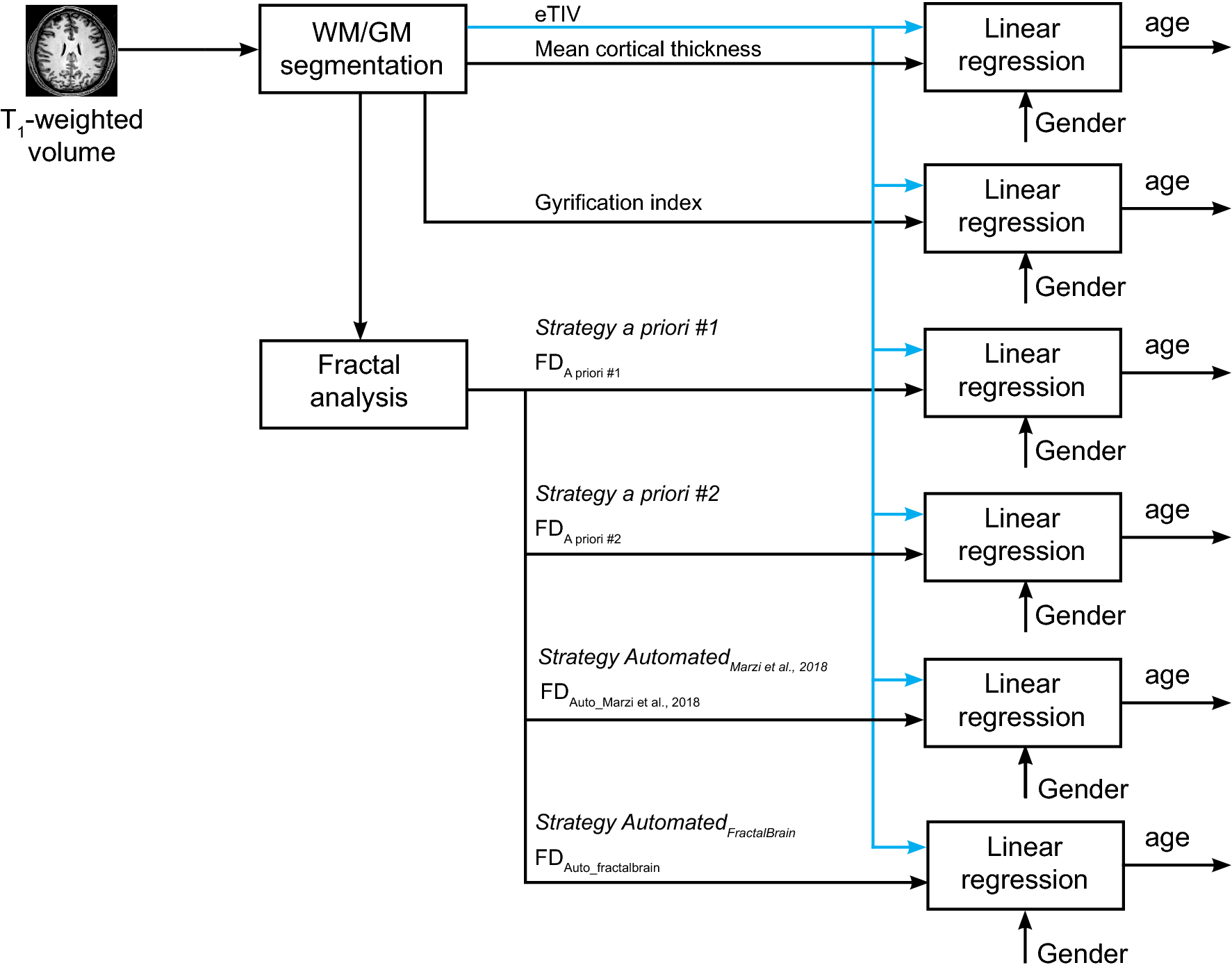

Toward a more reliable characterization of fractal properties of the cerebral cortex of healthy subjects during the lifespan

The Pattern Inside the Pattern: Fractals, the Hidden Order Beneath Chaos, and the Story of the Refugee Who Revolutionized the Mathematics of Reality – The Marginalian

Fractals and Support and Resistance

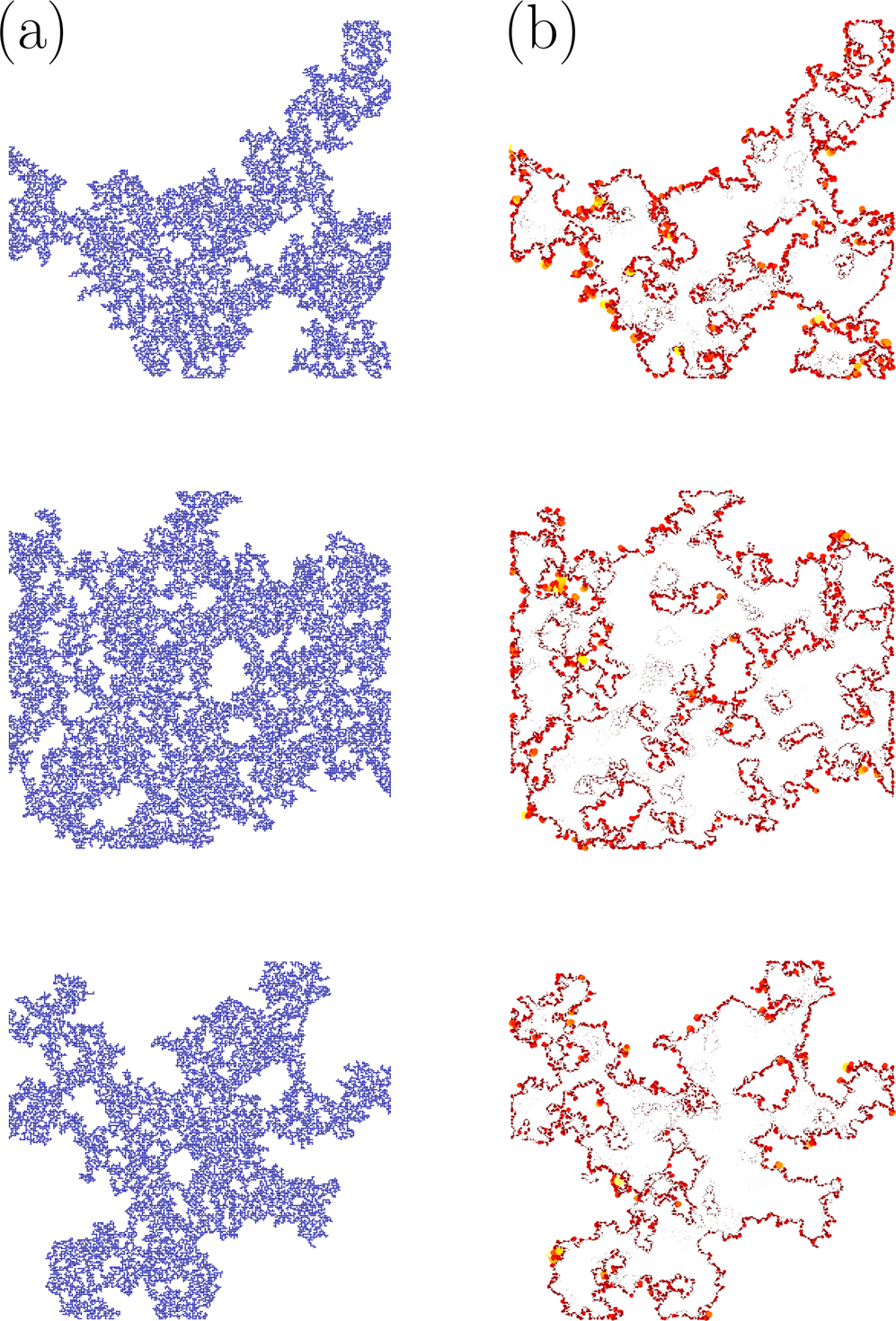

Topological random fractals

Recomendado para você

-

The steepest descent algorithm.16 novembro 2024

The steepest descent algorithm.16 novembro 2024 -

Mod-06 Lec-13 Steepest Descent Method16 novembro 2024

Mod-06 Lec-13 Steepest Descent Method16 novembro 2024 -

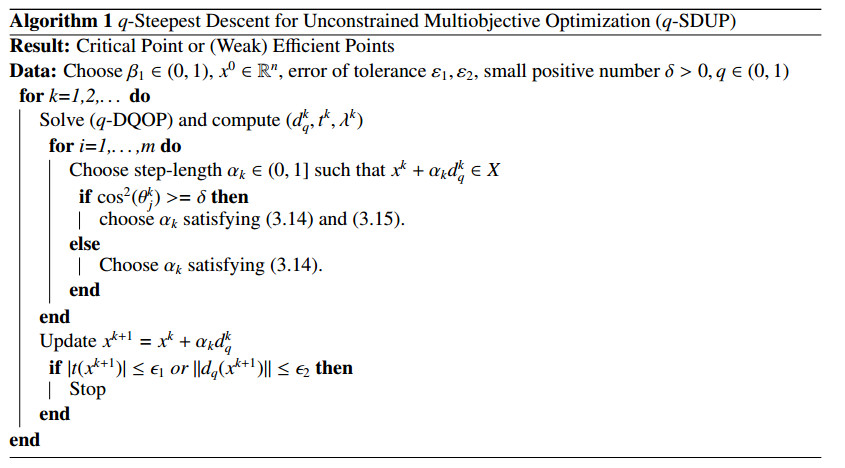

On q-steepest descent method for unconstrained multiobjective optimization problems16 novembro 2024

On q-steepest descent method for unconstrained multiobjective optimization problems16 novembro 2024 -

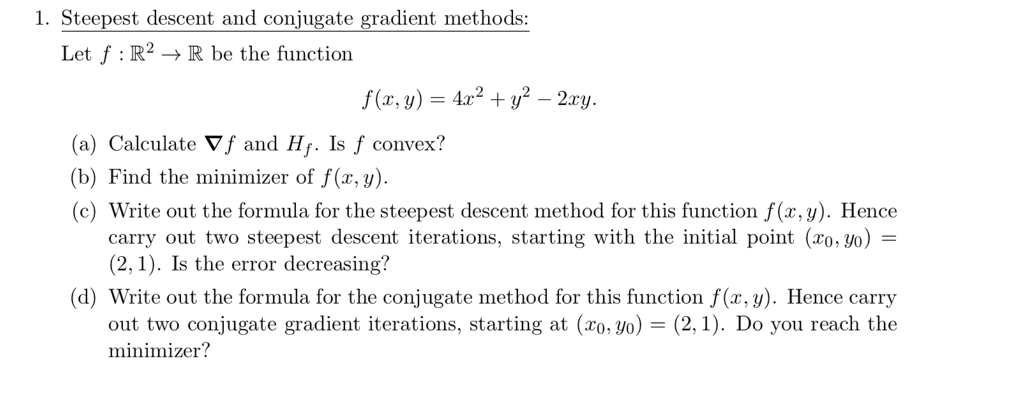

Solved 1. Steepest descent and conjugate gradient methods16 novembro 2024

Solved 1. Steepest descent and conjugate gradient methods16 novembro 2024 -

Steepest descent method16 novembro 2024

Steepest descent method16 novembro 2024 -

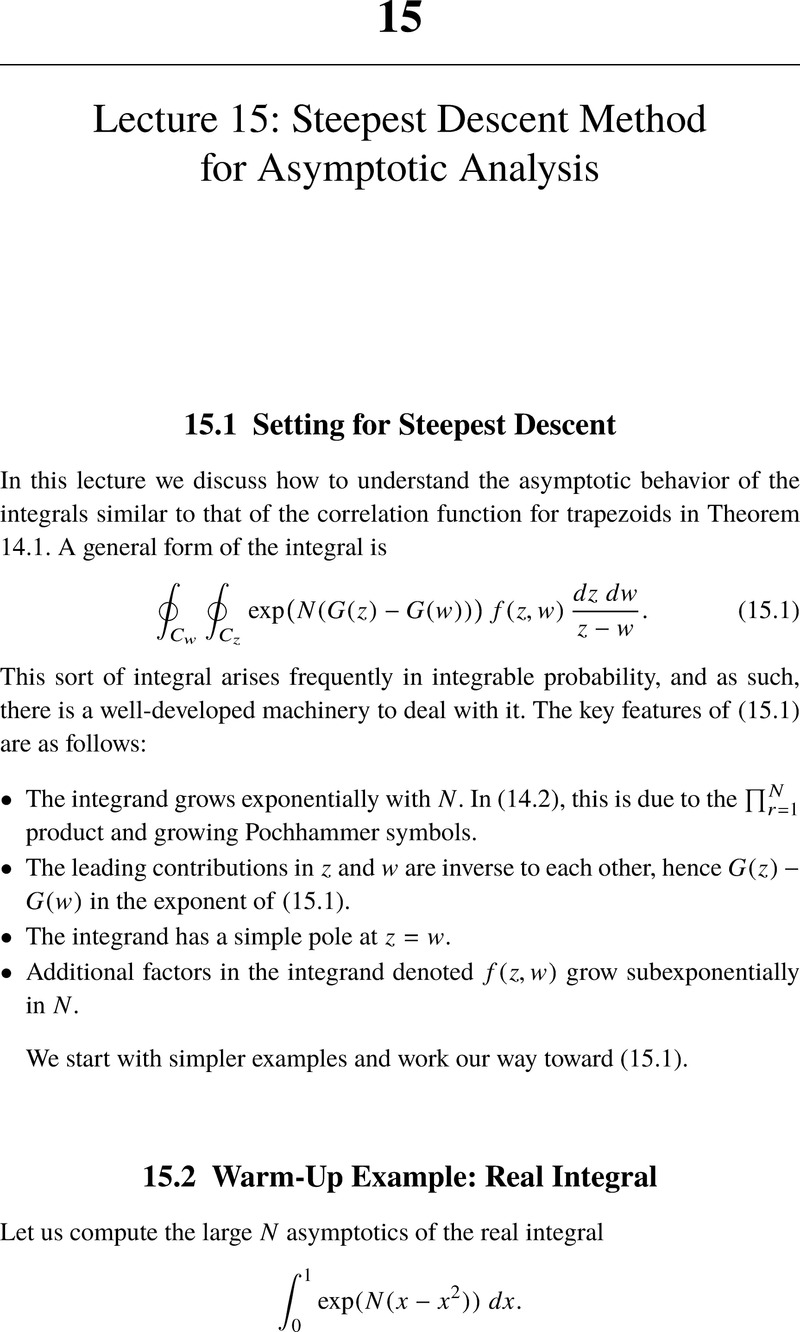

Lecture 15: Steepest Descent Method for Asymptotic Analysis (Chapter 15) - Lectures on Random Lozenge Tilings16 novembro 2024

Lecture 15: Steepest Descent Method for Asymptotic Analysis (Chapter 15) - Lectures on Random Lozenge Tilings16 novembro 2024 -

Descent method — Steepest descent and conjugate gradient in Python, by Sophia Yang, Ph.D.16 novembro 2024

Descent method — Steepest descent and conjugate gradient in Python, by Sophia Yang, Ph.D.16 novembro 2024 -

.png) A Beginners Guide to Gradient Descent Algorithm for Data Scientists!16 novembro 2024

A Beginners Guide to Gradient Descent Algorithm for Data Scientists!16 novembro 2024 -

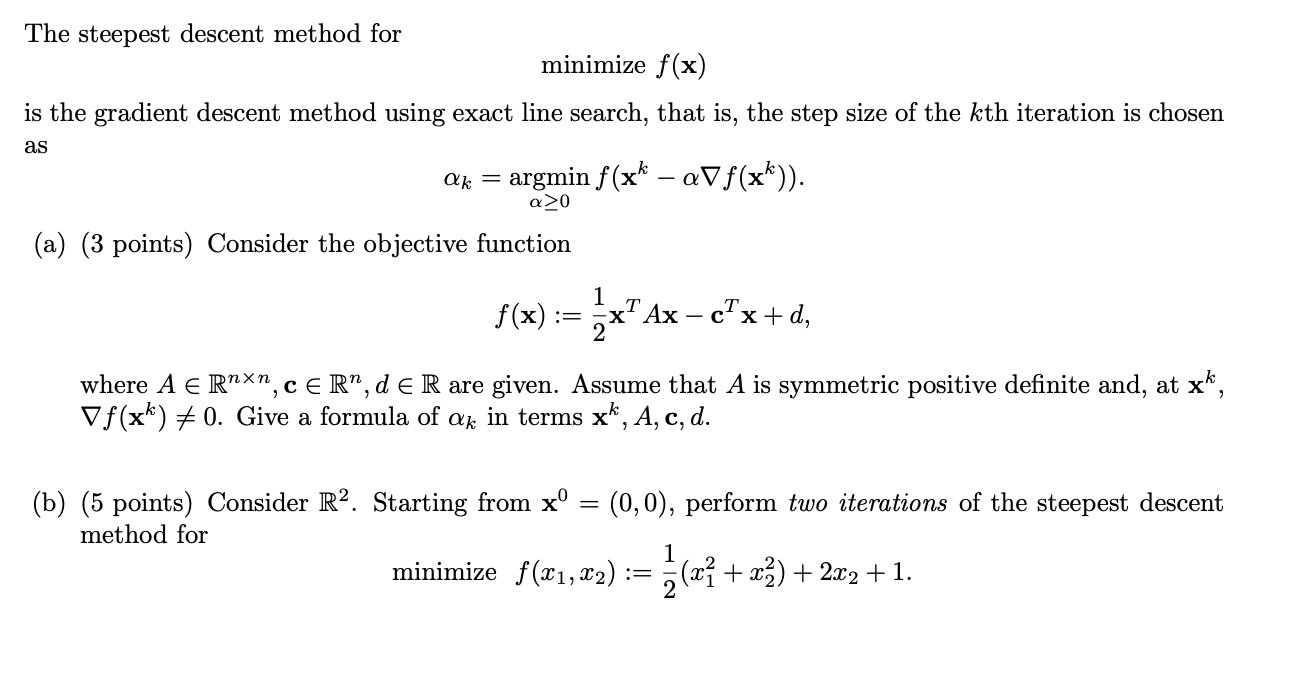

Solved The steepest descent method for minimize f(x) is the16 novembro 2024

Solved The steepest descent method for minimize f(x) is the16 novembro 2024 -

Gradient Descent Big Data Mining & Machine Learning16 novembro 2024

Gradient Descent Big Data Mining & Machine Learning16 novembro 2024
